LLMs develop their own understanding of reality as their language abilities improve

Ask a large language model (LLM) like GPT-4 to smell a rain-soaked campsite, and it will politely decline. However, ask it to describe that scent, and it will eloquently talk about “an air thick with anticipation” and “a scent that is both fresh and earthy,” even though it has never experienced rain and has no nose to make such observations. One possible explanation is that the LLM is simply mimicking the text in its vast training data, rather than having any real understanding of rain or smell.

But does the lack of eyes mean that language models can never "understand" that a lion is "larger" than a house cat? Philosophers and scientists have long considered the ability to assign meaning to language a key part of human intelligence and have wondered what essential elements allow us to do so.

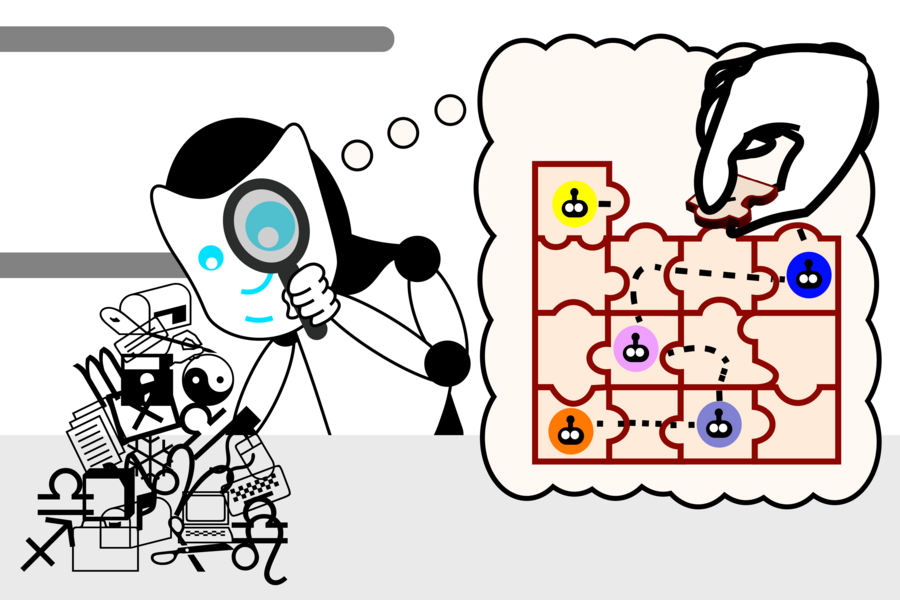

Exploring this mystery, researchers from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have found interesting results suggesting that language models might develop their own understanding of reality to enhance their ability to generate text. The team first created a set of small Karel puzzles, which involved coming up with instructions to control a robot in a simulated environment. They then trained a language model on the solutions without showing how the solutions actually worked. Finally, using a machine learning technique called "probing," they examined the model's "thought process" as it generated new solutions.
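To make the setup concrete, here is a minimal sketch of what "instructions to control a robot in a simulated environment" can look like. It is an illustration only: the instruction names, the grid size, and the rule that moves off the grid are ignored are all assumptions of this sketch, not the puzzle format used in the study.

```python
# Hypothetical, simplified Karel-like world (not the study's actual puzzle format).
# Headings are indexed 0..3 and stored as (dx, dy): north, east, south, west.
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def run_program(program, width=4, height=4, start=(0, 0), heading=0):
    """Execute a list of instructions and return the robot's final state."""
    x, y = start
    markers = set()
    for instruction in program:
        if instruction == "move":
            dx, dy = HEADINGS[heading]
            nx, ny = x + dx, y + dy
            # Assumption of this sketch: a move that would leave the grid is ignored.
            if 0 <= nx < width and 0 <= ny < height:
                x, y = nx, ny
        elif instruction == "turnRight":
            heading = (heading + 1) % 4
        elif instruction == "turnLeft":
            heading = (heading - 1) % 4
        elif instruction == "putMarker":
            markers.add((x, y))
        else:
            raise ValueError(f"unknown instruction: {instruction}")
    return {"position": (x, y), "heading": heading, "markers": markers}


# A "solution" is just a sequence of tokens like this one.
program = ["move", "move", "turnRight", "move", "putMarker"]
final_state = run_program(program)
```

The point of the sketch is the separation it makes visible: a language model trained only on token sequences like `program` never sees `run_program` or the states it produces, which is the "reality" the article says the model was not exposed to.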

After training on over 1 million random puzzles, they discovered that the model developed its own understanding of the underlying simulation, even though it was never exposed to this reality during training. These findings challenge our assumptions about what information is needed to learn linguistic meaning and whether LLMs might someday understand language more deeply than they do now.

“At the start of these experiments, the language model generated random instructions that didn’t work. By the time we completed training, our language model generated correct instructions at a rate of 92.4 percent,” says MIT electrical engineering and computer science (EECS) PhD student and CSAIL affiliate Charles Jin, who is the lead author of a new paper on the work. “This was a very exciting moment for us because we thought that if a language model could complete a task with that level of accuracy, it might also understand the meanings within the language. This gave us a starting point to explore whether LLMs do in fact understand text, and now we see that they’re capable of much more than just blindly stitching words together.”

Inside the Mind of an LLM

The probe allowed Jin to see this progress directly. Its job was to interpret what the LLM thought the instructions meant, and it revealed that the LLM had created its own internal simulation of how the robot moves in response to each instruction. As the model got better at solving puzzles, this internal simulation also became more accurate, indicating that the LLM was beginning to understand the instructions. Soon, the model was consistently assembling the pieces correctly to create working instructions.
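The general idea behind a probe can be sketched in a few lines: a small, separate classifier is trained to read some property of the simulated world out of the model's internal activations. The example below is a hedged illustration, not the study's method. The "hidden states" are synthetic vectors generated by a hypothetical `fake_hidden_state` helper, the probed property (whether the robot faces north) is chosen for illustration, and the probe is a plain logistic regression.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the sketch is reproducible
DIM = 8                 # size of the synthetic activation vectors (assumption)


def fake_hidden_state(facing_north):
    """Synthetic stand-in for a model activation (hypothetical data)."""
    state = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
    # Assumption: the property is encoded along one direction of the vector.
    state[3] += 3.0 if facing_north else -3.0
    return state


def make_dataset(n):
    labels = [rng.random() < 0.5 for _ in range(n)]
    return [(fake_hidden_state(label), label) for label in labels]


def train_probe(data, epochs=50, lr=0.1):
    """Fit a logistic-regression probe with plain stochastic gradient descent."""
    weights, bias = [0.0] * DIM, 0.0
    for _ in range(epochs):
        for state, label in data:
            logit = sum(w * s for w, s in zip(weights, state)) + bias
            logit = max(-30.0, min(30.0, logit))  # keep exp() numerically safe
            pred = 1.0 / (1.0 + math.exp(-logit))
            error = pred - (1.0 if label else 0.0)
            weights = [w - lr * error * s for w, s in zip(weights, state)]
            bias -= lr * error
    return weights, bias


def probe_accuracy(weights, bias, data):
    """Fraction of held-out states whose property the probe reads correctly."""
    correct = 0
    for state, label in data:
        logit = sum(w * s for w, s in zip(weights, state)) + bias
        correct += (logit > 0) == label
    return correct / len(data)


train, test = make_dataset(400), make_dataset(200)
weights, bias = train_probe(train)
accuracy = probe_accuracy(weights, bias, test)
```

If the probe reaches high accuracy on held-out activations, the property is recoverable from the model's internal state. In this sketch that outcome is guaranteed by construction, since the signal was planted in the synthetic data; with a real model, how accurate the probe becomes is the empirical question.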